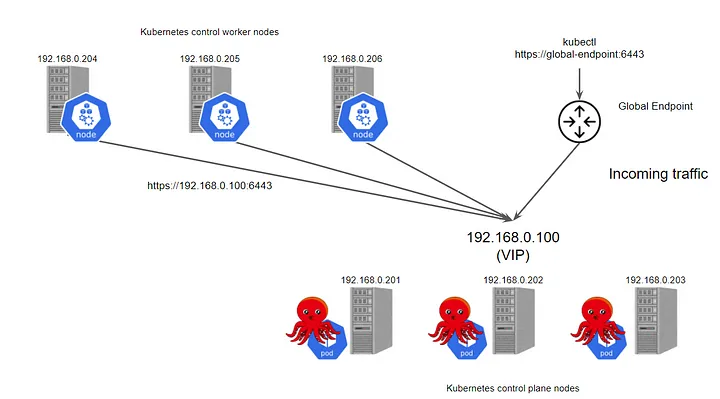

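For a highly available Kubernetes cluster on bare metal, the two main approaches today are kube-vip and haproxy+keepalived. kube-vip needs fewer physical machines and is simpler to configure, so it is the more popular choice.

Building the cluster requires six x64 machines running Debian 12. Each machine needs at least 2 CPUs, 4 GB of RAM, and a 500 GB disk. The Kubernetes version used here is 1.28.4. The kube-vip approach needs at least three control plane hosts.

The hostnames and IPs of the six machines are: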

master1 192.168.234.11

master2 192.168.234.12

master3 192.168.234.13

worker1 192.168.234.21

worker2 192.168.234.22

worker3 192.168.234.23

The highly available virtual IP is 192.168.234.30.

1. First, run the following on every host:

apt update

apt upgrade

Save the following script to a file named master.sh:

#!/bin/bash

# setup timezone

echo "[TASK 0] Set timezone"

timedatectl set-timezone Asia/Shanghai

apt-get install -y ntpdate >/dev/null 2>&1

ntpdate ntp.aliyun.com

echo "[TASK 1] Disable and turn off SWAP"

sed -i '/swap/d' /etc/fstab

swapoff -a

echo "[TASK 2] Stop and Disable firewall"

systemctl disable --now ufw >/dev/null 2>&1

echo "[TASK 3] Enable and Load Kernel modules"

cat >>/etc/modules-load.d/containerd.conf <<EOF

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

echo "[TASK 4] Add Kernel settings"

cat >>/etc/sysctl.d/kubernetes.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system >/dev/null 2>&1

echo "[TASK 5] Install containerd runtime"

apt-get update

apt-get install -y ca-certificates curl gnupg jq

install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/debian/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

chmod a+r /etc/apt/keyrings/docker.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt -qq update >/dev/null 2>&1

apt install -qq -y containerd.io >/dev/null 2>&1

containerd config default | \

sed -e 's,SystemdCgroup = .*,SystemdCgroup = true,' | \

sed -e 's,registry.k8s.io/pause:3.6,registry.aliyuncs.com/google_containers/pause:3.9,' | \

sudo tee /etc/containerd/config.toml

systemctl restart containerd

systemctl enable containerd >/dev/null 2>&1

echo "[TASK 6] Add apt repo for kubernetes"

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

apt-get update >/dev/null 2>&1

echo "[TASK 7] Install Kubernetes components (kubeadm, kubelet and kubectl)"

apt install -qq -y kubeadm kubelet kubectl >/dev/null 2>&1

Run the script on every host with sudo bash master.sh to install kubeadm, kubelet, and kubectl.
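Because the script sends most of its output to /dev/null, a failed step can go unnoticed. The following is a minimal sketch of a post-run check for swap, kernel modules, and sysctl settings. The function names are hypothetical helpers, not part of any tool, and the optional path arguments exist only so that the checks can be pointed at test files.

```shell
#!/bin/bash
# Sketch: verify what master.sh is expected to have configured.
# All function names here are hypothetical helpers.

# Succeeds when the swaps file (default /proc/swaps) lists no active swap.
# The file always has one header line; any further line is an active device.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(tail -n +2 "$swaps_file" | grep -c .)" -eq 0 ]
}

# Succeeds when the module appears in the modules file (default /proc/modules).
# A module compiled into the kernel is not listed there, so a failure
# is a hint to look closer, not proof that the feature is missing.
module_loaded() {
  local name="$1" modules_file="${2:-/proc/modules}"
  grep -q "^${name} " "$modules_file"
}

# Succeeds when a dotted sysctl key has the expected value under /proc/sys.
sysctl_is() {
  local key="$1" expected="$2" root="${3:-/proc/sys}" path
  path="$root/$(printf '%s' "$key" | tr . /)"
  [ -r "$path" ] && [ "$(cat "$path")" = "$expected" ]
}

# Example use on a prepared host:
#   swap_is_off || echo "swap is still on"
#   module_loaded overlay || echo "overlay not listed"
#   module_loaded br_netfilter || echo "br_netfilter not listed"
#   sysctl_is net.ipv4.ip_forward 1 || echo "ip_forward is not 1"
#   sysctl_is net.bridge.bridge-nf-call-iptables 1 || echo "bridge-nf-call-iptables is not 1"
```

If any check reports a problem, rerunning the relevant task from master.sh without the output redirection usually shows the underlying error.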

Check the installed versions with the following commands:

kubeadm version

kubelet --version

kubectl version --client

2. On master1, pull the images:

sudo kubeadm config images pull --image-repository=registry.aliyuncs.com/google_containers

3. Create the kube-vip static pod in ARP mode. On master1, run:

export VIP=192.168.234.30

export INTERFACE=ens33

KVVERSION=$(curl -sL https://api.github.com/repos/kube-vip/kube-vip/releases | jq -r ".[0].name")

alias kube-vip="ctr image pull ghcr.io/kube-vip/kube-vip:$KVVERSION; ctr run --rm --net-host ghcr.io/kube-vip/kube-vip:$KVVERSION vip /kube-vip"

kube-vip manifest pod --interface $INTERFACE --address $VIP --controlplane --services --arp --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

Here ens33 is the name of the host's network interface; change it to match your environment.
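A wrong interface name or a failed image pull can leave an unusable manifest behind, and kubeadm init would then hang waiting for the virtual IP. Below is a small sanity check, offered as a sketch: both function names are hypothetical, and the check only confirms that the file is non-empty, declares a Pod, and mentions the two values. It does not validate the manifest.

```shell
#!/bin/bash
# Sketch: sanity checks before running kubeadm init.
# Function names are hypothetical helpers.

# Succeeds when the interface exists on this host (looked up in /sys/class/net).
iface_exists() {
  local iface="$1" root="${2:-/sys/class/net}"
  [ -e "$root/$iface" ]
}

# Succeeds when the manifest is non-empty, declares a Pod,
# and contains both the virtual IP and the interface name.
manifest_looks_sane() {
  local file="$1" vip="$2" iface="$3"
  [ -s "$file" ] &&
    grep -q '^kind: Pod' "$file" &&
    grep -qF "$vip" "$file" &&
    grep -qF "$iface" "$file"
}

# Example use on master1:
#   iface_exists "$INTERFACE" || echo "no such interface: $INTERFACE"
#   manifest_looks_sane /etc/kubernetes/manifests/kube-vip.yaml "$VIP" "$INTERFACE" \
#     || echo "kube-vip.yaml looks wrong, regenerate it"
```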

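4. Next, initialize the first control plane node. On master1, run:

kubeadm init --image-repository registry.aliyuncs.com/google_containers --control-plane-endpoint=192.168.234.30:6443 --upload-certs

Here 192.168.234.30 is the highly available virtual IP; change it to match your environment.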
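Once kubeadm init returns, it can be worth confirming that the API server answers on the virtual IP and not only on master1's own address. The sketch below polls an endpoint until it returns ok. It assumes the cluster keeps the default kubeadm setting that lets unauthenticated clients read /livez. The function name is hypothetical, and -k skips certificate verification, which is acceptable only for a quick reachability probe.

```shell
#!/bin/bash
# Sketch: wait until the API server answers on the virtual IP.
# wait_for_apiserver is a hypothetical helper name.

# Polls the URL until the body is exactly "ok" or the attempts run out.
# Arguments: URL, number of attempts (default 30), delay in seconds (default 2).
wait_for_apiserver() {
  local url="$1" tries="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$tries" ]; do
    if [ "$(curl -ks --max-time 5 "$url" 2>/dev/null)" = "ok" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example use on master1:
#   wait_for_apiserver https://192.168.234.30:6443/livez \
#     && echo "API server reachable through the VIP" \
#     || echo "VIP not answering, check the kube-vip pod"
```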

Then, still on master1, run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

5. On master1, generate the control plane join command:

echo $(kubeadm token create --print-join-command) --control-plane --certificate-key $(kubeadm init phase upload-certs --upload-certs | sed -n '3p')

On master2 and master3, run the join command that it prints.
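The one-liner above depends on the certificate key being on the third line of the upload-certs output. A slightly more defensive variant, sketched below, picks the key by its shape (64 lowercase hex characters) and refuses to build a command if either part is empty. Both function names are hypothetical. Keep in mind that the uploaded certificates expire after two hours and the token after 24 hours by default, so generate the command shortly before using it.

```shell
#!/bin/bash
# Sketch: build the control plane join command without relying on line numbers.
# Function names are hypothetical helpers.

# Reads the output of `kubeadm init phase upload-certs --upload-certs` on stdin
# and prints the first line that consists of exactly 64 hex characters.
extract_cert_key() {
  grep -E '^[0-9a-f]{64}$' | head -n 1
}

# Combines a worker join command and a certificate key into a
# control plane join command. Fails if either argument is empty.
build_control_plane_join() {
  local join_cmd="$1" cert_key="$2"
  [ -n "$join_cmd" ] && [ -n "$cert_key" ] || return 1
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Example use on master1 (as root):
#   key=$(kubeadm init phase upload-certs --upload-certs | extract_cert_key)
#   build_control_plane_join "$(kubeadm token create --print-join-command)" "$key"
```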

Then, on master2 and master3, run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

That completes joining the master hosts. Next, add the worker hosts to the cluster.

On master1, generate the join command:

kubeadm token create --print-join-command

Run the join command it prints on each worker host to add it to the cluster.

On a master host, kubectl get node should now list all six hosts.
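At this point the nodes are expected to show NotReady, because no CNI has been installed yet, so the useful check is presence, not status. The sketch below compares the node list against the six expected hostnames and prints any that are missing. The function name is a hypothetical helper.

```shell
#!/bin/bash
# Sketch: report expected nodes that have not joined yet.
# missing_nodes is a hypothetical helper name.

# Reads `kubectl get node --no-headers` output on stdin.
# Arguments are the expected node names; prints each one that is absent.
missing_nodes() {
  local actual name
  actual=$(awk '{print $1}')
  for name in "$@"; do
    printf '%s\n' "$actual" | grep -qxF "$name" || printf '%s\n' "$name"
  done
}

# Example use on a master host:
#   kubectl get node --no-headers \
#     | missing_nodes master1 master2 master3 worker1 worker2 worker3
# No output means all six hosts have joined.
```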

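Copy /etc/kubernetes/manifests/kube-vip.yaml from master1 to master2 and master3, then run the following command on all three master hosts so that it takes effect:

systemctl restart kubelet

6. Next, install the CNI. Cilium is chosen here for its strong security features and observability. On master1, run: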

CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum

sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

cilium install

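cilium status --wait

7. Install the MetalLB load balancer controller. MetalLB is a commonly used component for implementing LoadBalancer Services on bare metal. On master1, run:

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.14.3/config/manifests/metallb-native.yaml

Configure an address pool for dynamic allocation to load-balanced services, using layer 2 mode. Save the following to a file named ippool.yaml: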

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

  name: first-pool

  namespace: metallb-system

spec:

  addresses:

  - 192.168.234.80-192.168.234.120

---

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

  name: metallb-l2-adv

  namespace: metallb-system

Run kubectl apply -f ippool.yaml to apply it. Here 192.168.234.80-192.168.234.120 is the range the pool allocates from; change it to match your environment.
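When adapting the range, the pool should not contain any node address or the kube-vip virtual IP, otherwise two components may end up answering ARP for the same address. The sketch below checks whether an IPv4 address falls inside a start-end range. The function names are hypothetical helpers, and the code handles only the dash-separated range form used above, not CIDR notation.

```shell
#!/bin/bash
# Sketch: test whether an IPv4 address lies inside a MetalLB-style range.
# Function names are hypothetical helpers.

# Converts a dotted IPv4 address to a single integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Succeeds when the address (arg 1) is between start (arg 2) and end (arg 3), inclusive.
ip_in_range() {
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "$2")
  end=$(ip_to_int "$3")
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

# Example use: make sure the VIP and the node IPs are outside the pool.
#   for ip in 192.168.234.30 192.168.234.11 192.168.234.12 192.168.234.13 \
#             192.168.234.21 192.168.234.22 192.168.234.23; do
#     ip_in_range "$ip" 192.168.234.80 192.168.234.120 && echo "conflict: $ip"
#   done
```

The same helper can confirm that the external IP assigned to a LoadBalancer Service, as shown by kubectl get svc, came from the pool.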

8. Next, install the ingress controller. On master1, run:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.10.0/deploy/static/provider/cloud/deploy.yaml

9. Install the cert-manager certificate manager. On master1, run:

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.14.4/cert-manager.yaml

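After these steps, the installation is essentially complete.

Run kubectl get pod -A to watch the system pods in the cluster, and troubleshoot any that are in an abnormal state promptly.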
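With several components installed, the full pod list gets long. The following sketch filters it down to pods that need attention: anything that is not Running, plus Running pods whose containers are not all ready. Completed pods, such as the one-shot admission jobs that ingress-nginx creates, are skipped. The function name is a hypothetical helper, and the column positions assume the default output of kubectl get pod -A --no-headers.

```shell
#!/bin/bash
# Sketch: list pods that are not healthy.
# unhealthy_pods is a hypothetical helper name.

# Reads `kubectl get pod -A --no-headers` output on stdin.
# Columns: NAMESPACE NAME READY STATUS RESTARTS AGE
# Prints "namespace/name STATUS READY" for each pod needing attention.
unhealthy_pods() {
  awk '{
    split($3, ready, "/")
    if ($4 == "Completed") next
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2, $4, $3
  }'
}

# Example use on a master host:
#   kubectl get pod -A --no-headers | unhealthy_pods
# No output means every pod is either Running and fully ready, or Completed.
```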

References:

https://learn-k8s-from-scratch.readthedocs.io/en/latest/k8s-install/kubeadm-cn.html

https://www.ebanban.com/?p=967

https://metallb.universe.tf/configuration/_advanced_l2_configuration/

https://kube-vip.io/docs/installation/static/

https://kubernetes.github.io/ingress-nginx/deploy/baremetal/

https://cert-manager.io/docs/installation/kubectl/

https://docs.cilium.io/en/stable/gettingstarted/k8s-install-default/#k8s-install-quick